In [ ]:

# write your code

# case 1

Task 2. Is there any difference? (2 points)

In some cases, applying PCA followed by Logistic Regression (LR) yields exactly the same separating hyperplane as applying LR alone (let us denote this as $PCA + LR \sim LR$). In such cases there is a specific relation R between the projection hyperplane of PCA and the separating hyperplane of LR.

What is the relation R? Describe it and explain why R is necessary and sufficient for $PCA + LR \sim LR$.
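One way to check $PCA + LR \sim LR$ numerically is to map the hyperplane that LR learns on the PCA scores back into the original feature space and compare its normal vector with the normal learned by LR alone. The following is a minimal sketch under stated assumptions: scikit-learn is used, PCA is not whitened, the data from `make_classification` is only a placeholder, and the helper names `hyperplane_in_input_space` and `cosine` are hypothetical. It illustrates the comparison and does not answer the question about R.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline


def hyperplane_in_input_space(pca, lr):
    """Express an LR hyperplane fitted on PCA scores in the original features.

    With whiten=False, PCA.transform computes z = (x - mean_) @ components_.T,
    so w_z . z + b_z = w . x + b with w = components_.T @ w_z and
    b = b_z - w . mean_.
    """
    w = pca.components_.T @ lr.coef_.ravel()
    b = lr.intercept_[0] - w @ pca.mean_
    return w, b


def cosine(u, v):
    """Cosine of the angle between two normal vectors (hypothetical helper)."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


# Placeholder data (an assumption); replace it with the data set under study.
X, y = make_classification(
    n_samples=300, n_features=5, n_informative=3, n_redundant=0, random_state=0
)

lr_only = LogisticRegression(max_iter=1000).fit(X, y)
pca_lr = make_pipeline(
    PCA(n_components=2), LogisticRegression(max_iter=1000)
).fit(X, y)

w_lr = lr_only.coef_.ravel()
w_pca_lr, b_pca_lr = hyperplane_in_input_space(
    pca_lr.named_steps["pca"], pca_lr.named_steps["logisticregression"]
)

# A value close to +1 means the two normals point in nearly the same direction.
print("cosine between normals:", cosine(w_lr, w_pca_lr))
```

Equal directions of the normals are not enough on their own: the intercepts, rescaled by the norms of the normals, would also have to agree for the two hyperplanes to coincide.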

Write your answer here.

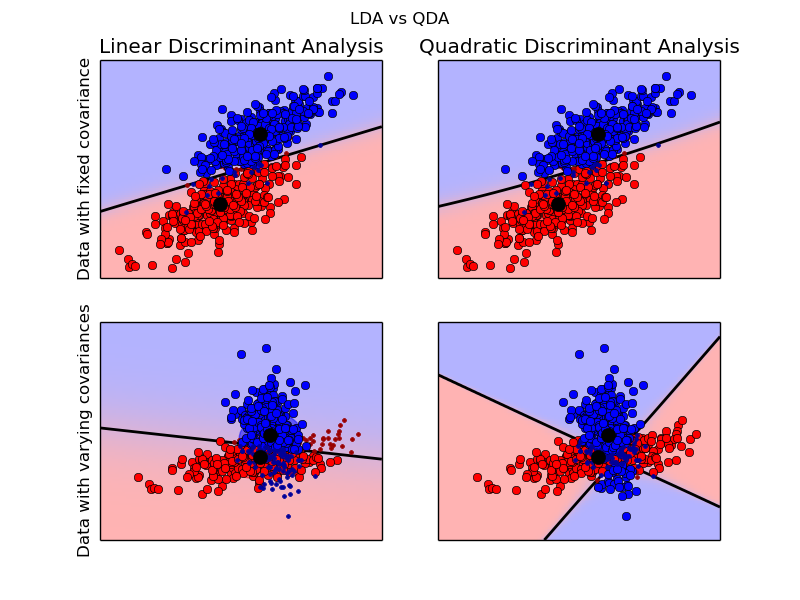

Task 3. PCA, LDA and QDA (1 point)

Provide an example in which both PCA followed by LDA and LDA alone perform worse than QDA. Provide a theoretical explanation of the proposed case.
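For the theoretical part it may help to have the standard Gaussian discriminant functions at hand. The notation is an assumption, since the notebook does not fix one: $\mu_k$ is the mean of class $k$, $\pi_k$ its prior, $\Sigma$ the covariance shared by all classes in LDA, and $\Sigma_k$ the class-specific covariance in QDA.

$$
\delta_k^{\mathrm{LDA}}(x) = x^\top \Sigma^{-1} \mu_k - \tfrac{1}{2} \mu_k^\top \Sigma^{-1} \mu_k + \log \pi_k
$$

$$
\delta_k^{\mathrm{QDA}}(x) = -\tfrac{1}{2} \log \lvert \Sigma_k \rvert - \tfrac{1}{2} (x - \mu_k)^\top \Sigma_k^{-1} (x - \mu_k) + \log \pi_k
$$

The LDA score is linear in $x$, so its decision boundaries are hyperplanes, while the QDA score keeps the quadratic term $x^\top \Sigma_k^{-1} x$ and therefore admits curved boundaries.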

In [ ]:

# write your code here

Task 4. PCA, LDA, QDA and...PCA (4 points)

Provide an example in which both PCA followed by LDA and LDA alone have accuracy on the validation set < 100%, whereas QDA has accuracy on the validation set = 100%, and:

- PCA followed by QDA has accuracy on the validation set < 100% (1 pt)

- PCA followed by QDA has accuracy on the validation set = 100% (3 pts)
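The four accuracies named above can be measured with one small harness. This is a sketch under assumptions, not a solution: it uses scikit-learn, the helper name `validation_accuracies` is hypothetical, the 70/30 split and `n_components=1` are arbitrary choices, and the `make_classification` data is only a placeholder that is not expected to satisfy the task conditions.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.discriminant_analysis import (
    LinearDiscriminantAnalysis,
    QuadraticDiscriminantAnalysis,
)
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline


def validation_accuracies(X, y, n_components=1, seed=0):
    """Fit LDA, PCA + LDA, QDA and PCA + QDA; return accuracy on the validation set."""
    X_train, X_valid, y_train, y_valid = train_test_split(
        X, y, test_size=0.3, stratify=y, random_state=seed
    )
    models = {
        "LDA": LinearDiscriminantAnalysis(),
        "PCA + LDA": make_pipeline(
            PCA(n_components=n_components), LinearDiscriminantAnalysis()
        ),
        "QDA": QuadraticDiscriminantAnalysis(),
        "PCA + QDA": make_pipeline(
            PCA(n_components=n_components), QuadraticDiscriminantAnalysis()
        ),
    }
    return {
        name: model.fit(X_train, y_train).score(X_valid, y_valid)
        for name, model in models.items()
    }


# Placeholder data (an assumption); replace it with the constructed example.
X, y = make_classification(
    n_samples=400, n_features=2, n_informative=2, n_redundant=0, random_state=0
)
accuracies = validation_accuracies(X, y)
for name, acc in accuracies.items():
    print(f"{name}: {acc:.3f}")
```

Keeping PCA inside the pipeline means the projection is fitted on the training part only, so the validation accuracy is not influenced by validation points.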

In [ ]:

# write your code here